Assessing animal behavior with machine learning

Machine learning (ML) is an emerging tool in the field of animal behavior research, offering substantial improvements in the speed, objectivity, and sophistication of behavioral analysis. Traditional methods of analyzing animal behavior often involve manual scoring of observed behaviors, which can be time-consuming and potentially biased. In contrast, ML algorithms can automatically classify behaviors and detect subtle patterns that might be overlooked in manual analysis. Machine learning methods therefore offer the possibility of high-throughput, objective behavioral analysis.

Using machine learning to assess animal behavior. Image generated with DALL-E (sourceꜛ)


In a typical machine learning-based behavioral analysis, video footage of animals is processed and analyzed by an ML algorithm that has been trained to recognize specific behaviors. This could involve supervised learning, where the algorithm is trained on labeled examples of different behaviors, or unsupervised learning, where the algorithm identifies patterns in the data without any prior labeling.

The application of machine learning to animal behavior studies has been facilitated by software platforms such as DeepLabCutꜛ, for markerless pose estimation, and JAABAꜛ, for interactive annotation of behaviors. These tools provide user-friendly interfaces for training ML models on new datasets and have been used to study a wide range of behaviors in various species.

Machine learning in a nutshell

Let’s briefly sketch out the basic concepts of machine learning. You can skip this section if you are already familiar with machine learning.

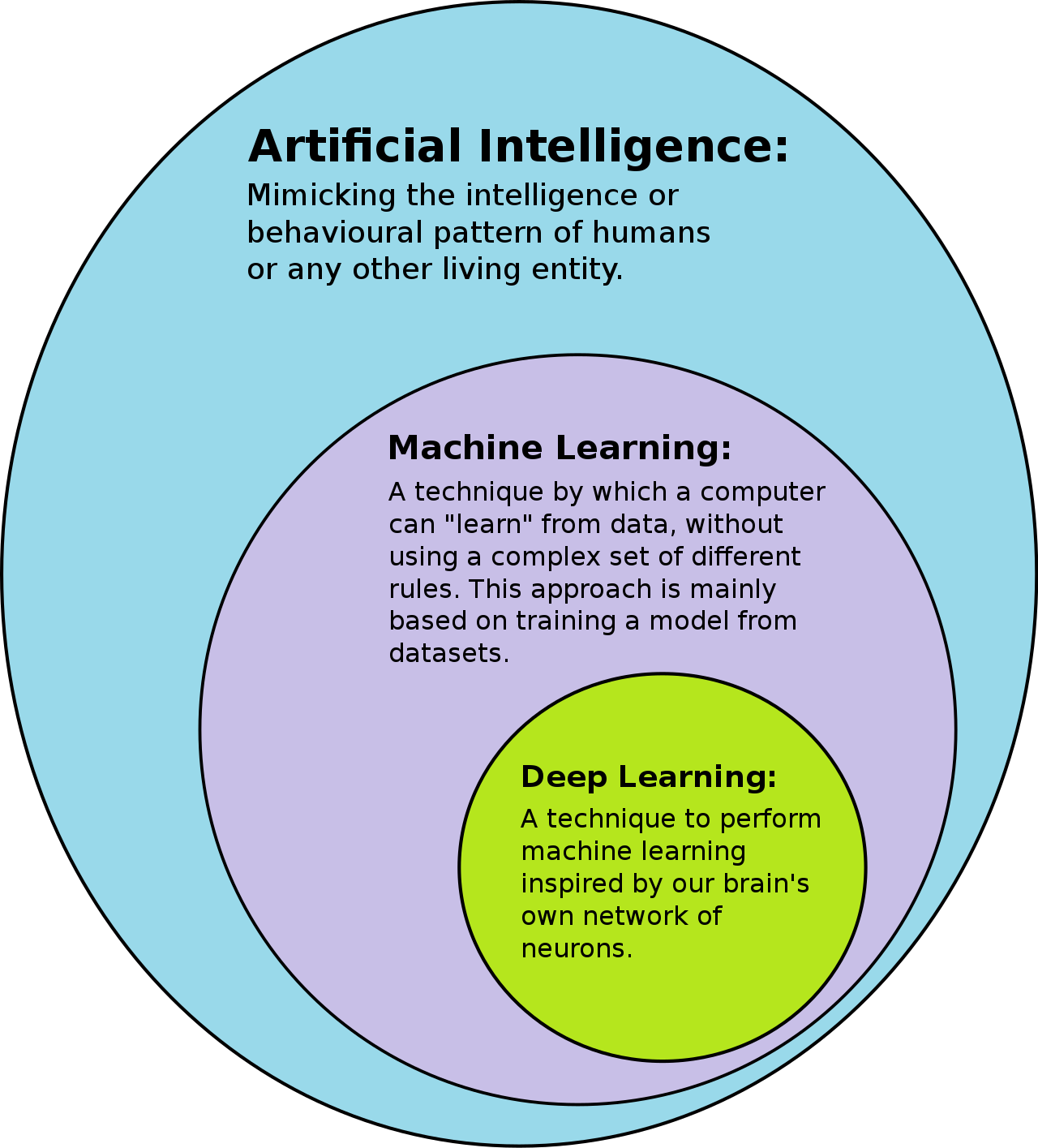

Machine Learning (ML), a subset of artificial intelligence (AI), is concerned with algorithms that learn from data. Instead of being explicitly programmed to perform a task, a machine learning algorithm learns patterns from example data and uses those patterns to make predictions or decisions about new data.

There are three main types of machine learning:

- Supervised Learning: The algorithm learns from labeled data, i.e., data that includes both the input and the correct output. The goal is to learn a mapping function from inputs to outputs.

- Unsupervised Learning: The algorithm learns from unlabeled data, i.e., data without a pre-specified output. The goal is to find structure or patterns in the data, such as grouping or clustering of data points.

- Reinforcement Learning: The algorithm learns by interacting with an environment, receiving rewards or penalties for its actions, and gradually learning a strategy that maximizes the cumulative reward over time.
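To make the difference between the first two types concrete, here is a minimal sketch in plain Python. It assumes two hypothetical per-frame features (speed in cm/s and a body elongation ratio) and made-up values. The supervised part fits a nearest-centroid classifier to labeled frames; the unsupervised part runs k-means on unlabeled frames and only finds groups, without knowing what they mean.

```python
import math
import random

# Hypothetical labeled frames: ((speed in cm/s, body elongation ratio), label).
labeled = [
    ((0.2, 1.1), "rest"), ((0.5, 1.0), "rest"),
    ((8.0, 1.6), "walk"), ((9.5, 1.5), "walk"),
]

def centroids(data):
    """Supervised learning: average the feature vectors of each labeled class."""
    sums, counts = {}, {}
    for (x, y), label in data:
        sx, sy = sums.get(label, (0.0, 0.0))
        sums[label] = (sx + x, sy + y)
        counts[label] = counts.get(label, 0) + 1
    return {lab: (sx / counts[lab], sy / counts[lab]) for lab, (sx, sy) in sums.items()}

def predict(model, point):
    """Assign the label of the nearest class centroid."""
    return min(model, key=lambda lab: math.dist(point, model[lab]))

def kmeans(points, k, iterations=20, seed=0):
    """Unsupervised learning: group unlabeled points into k clusters."""
    rng = random.Random(seed)
    centers = rng.sample(points, k)
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centers[i]))
            clusters[nearest].append(p)
        # Move each center to the mean of its cluster (keep it if the cluster is empty).
        centers = [
            tuple(sum(coord) / len(cl) for coord in zip(*cl)) if cl else centers[i]
            for i, cl in enumerate(clusters)
        ]
    return centers, clusters

model = centroids(labeled)
print(predict(model, (7.0, 1.4)))  # "walk"

unlabeled = [(0.3, 1.0), (0.4, 1.2), (9.0, 1.5), (8.5, 1.7)]
centers, clusters = kmeans(unlabeled, k=2)
print(sorted(len(c) for c in clusters))  # [2, 2]
```

Note that k-means returns anonymous clusters; deciding that one of them corresponds to "walking" is still up to the researcher.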

How the terms Artificial Intelligence (AI), Machine Learning (ML), and Deep Learning (DL) relate to each other. Source: Wikimediaꜛ (CC BY-SA 4.0 license)


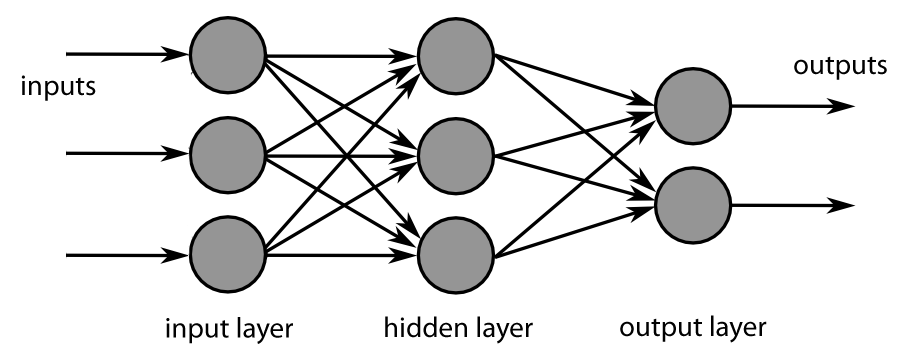

Deep Learning (DL), a subset of machine learning, uses artificial neural networks with multiple layers (hence “deep”) to model complex patterns. Each layer in a deep learning model learns to transform its input into a slightly more abstract and composite representation, and the final layer produces the prediction or decision.

Schematic of a simple feed-forward neural network consisting of three layers: the input layer (left) with, in this case, three input neurons; a hidden layer (middle), whose neurons are neither inputs nor outputs; and the output layer (right) with, in this case, two output neurons. Deep networks stack multiple hidden layers. Source: Wikimediaꜛ (CC BY-SA 3.0 license)
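The forward pass of a network like the one in the schematic can be written in a few lines. This is a sketch under stated assumptions: the hidden layer size (four neurons), the ReLU nonlinearity, and all weights are hypothetical and hand-picked, whereas a real network would learn its weights from data.

```python
def relu(v):
    """Rectified linear unit: keep positive values, set negative ones to zero."""
    return [max(0.0, x) for x in v]

def dense(inputs, weights, biases):
    """Fully connected layer: each neuron computes a weighted sum of all inputs plus a bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b for row, b in zip(weights, biases)]

# Hypothetical weights: 4 hidden neurons x 3 inputs.
W_hidden = [[0.5, -0.2, 0.1],
            [0.3, 0.8, -0.5],
            [-0.6, 0.1, 0.9],
            [0.2, 0.2, 0.2]]
b_hidden = [0.0, 0.1, 0.0, -0.1]
# Hypothetical weights: 2 outputs x 4 hidden neurons.
W_out = [[1.0, -1.0, 0.5, 0.0],
         [-0.5, 0.5, 0.0, 1.0]]
b_out = [0.0, 0.0]

def forward(x):
    hidden = relu(dense(x, W_hidden, b_hidden))  # input layer -> hidden layer
    return dense(hidden, W_out, b_out)           # hidden layer -> two raw output scores

print(forward([1.0, 0.5, -1.0]))  # approximately [-1.0, 0.5]
```

Adding more hidden layers means chaining more `dense` + `relu` steps between input and output, which is what makes a network "deep".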


Deep learning has been particularly effective in tasks involving large amounts of unstructured data, such as images, audio, and text. This makes it well suited to animal behavior research, where experiments often produce large and complex video, audio, or sensor datasets. Applications range from automated animal tracking to behavior classification and health monitoring:

- Animal Tracking and Identification: Deep learning algorithms can be used to automatically identify and track animals in images or videos. For instance, convolutional neural networks (CNNs)ꜛ can be trained to recognize different animals or even individual animals based on their patterns, shapes, and colors. This can significantly reduce the manual labor needed for animal tracking studies and increase the accuracy and consistency of the data.

- Behavior Classification: Animals exhibit a variety of behaviors that might be hard to categorize manually. Recurrent neural networks (RNNs)ꜛ or transformers, which are good at handling sequential data, can be used to analyze time-series data such as movement tracking data or audio signals to classify different types of behavior.

- Behavior and Health Monitoring: Changes in an animal’s behavior often indicate changes in health. Deep learning algorithms can learn to detect these changes from sensor data, video data, or other types of monitoring data, allowing early detection of potential health issues.
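The key idea behind recurrent networks for behavior classification is that each time step updates a hidden state that carries information from earlier steps. The sketch below is a minimal Elman-style RNN in plain Python; the input (one normalized speed value per frame), the two classes, and all weights are hypothetical and hand-picked rather than trained.

```python
import math

def rnn_classify(sequence, W_in, W_rec, b_h, W_out, b_out):
    """Run a minimal Elman RNN over a sequence and return class probabilities."""
    h = [0.0] * len(W_rec)  # hidden state starts at zero
    for x in sequence:
        # Each new hidden value combines the current input with the previous hidden state.
        h = [
            math.tanh(
                sum(w * xi for w, xi in zip(W_in[j], x))
                + sum(w * hk for w, hk in zip(W_rec[j], h))
                + b_h[j]
            )
            for j in range(len(W_rec))
        ]
    # Read out one score per behavior class from the final hidden state.
    scores = [sum(w * hj for w, hj in zip(row, h)) + b for row, b in zip(W_out, b_out)]
    # Softmax converts the scores into probabilities that sum to 1.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical, hand-picked weights; a real model would learn them from labeled sequences.
CLASSES = ["resting", "running"]
params = dict(
    W_in=[[0.8], [-0.8]],            # 2 hidden units x 1 input feature (normalized speed)
    W_rec=[[0.5, 0.0], [0.0, 0.5]],  # recurrent weights: hidden state -> next hidden state
    b_h=[-1.0, 1.0],
    W_out=[[-2.0, 2.0], [2.0, -2.0]],
    b_out=[0.0, 0.0],
)

fast = [[2.0], [2.5], [3.0]]
slow = [[0.1], [0.0], [0.2]]
for name, seq in [("fast", fast), ("slow", slow)]:
    probs = rnn_classify(seq, **params)
    print(name, CLASSES[probs.index(max(probs))], [round(p, 3) for p in probs])
```

Transformers replace the step-by-step hidden state with attention over all time steps, but the input (a sequence of per-frame features) and the output (class probabilities) look the same.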

If you want to learn more about machine learning, I recommend the book by Ian Goodfellow et al., “Deep Learning” (MIT Press, 2016), which is freely available onlineꜛ.

Examples for ML-based behavioral analysis

In the following, we highlight two notable implementations of machine learning-based behavioral assessment. Note, however, that a wide array of tools and methods exists, each designed for particular research questions. We therefore strongly encourage readers to consult the literature and explore the available resources to identify the tool best suited to their specific research needs.

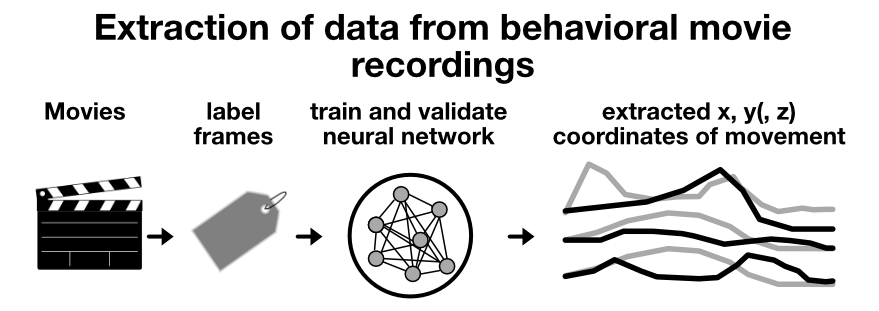

DeepLabCut

DeepLabCutꜛ (click on this link to see some sample movies) is a state-of-the-art deep learning-based tool for markerless pose estimation and tracking in a variety of organisms. It uses convolutional neural networks (CNNs) to identify and track user-defined body parts (keypoints) in video footage. Because it is markerless, no physical markers need to be attached to the animal, and after training on a relatively small set of manually labeled frames, the network annotates the remaining video frames automatically. This makes it efficient and accurate for analyzing complex behaviors (Mathis et al. (2018)ꜛ; for a related toolkit, see Graving et al. (2019)ꜛ).
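Pose-estimation CNNs of this kind typically output a score map (heatmap) per body part, and the predicted keypoint location is taken near the maximum of that map. The toy sketch below illustrates only this principle and is not DeepLabCut's code: a single hand-set convolution filter stands in for the trained network, and the 6x6 frame with one bright "body part" is hypothetical.

```python
def conv2d(image, kernel):
    """'Valid' 2D cross-correlation, the core operation of a convolutional layer."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(kernel[i][j] * image[r + i][c + j] for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]

def argmax_2d(score_map):
    """Return (row, col) of the highest score, i.e. the predicted keypoint location."""
    return max(
        ((r, c) for r, row in enumerate(score_map) for c in range(len(row))),
        key=lambda rc: score_map[rc[0]][rc[1]],
    )

# Hypothetical 6x6 grayscale frame with a bright 2x2 "body part" at rows 3-4, columns 2-3.
frame = [[0] * 6 for _ in range(6)]
for r in (3, 4):
    for c in (2, 3):
        frame[r][c] = 1

# Hand-set 2x2 filter that responds to bright blobs; a trained CNN learns its filters from labeled frames.
kernel = [[1, 1], [1, 1]]
scores = conv2d(frame, kernel)
print(argmax_2d(scores))  # (3, 2): top-left corner of the best-matching 2x2 window
```

Real networks stack many learned filters and output one score map per body part, but reading off the location from the score map works on the same principle.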

Sketch depicting the extraction of data from behavioral movie recordings.


When applied to tracking mice, DeepLabCut can precisely monitor the movements and postures of individual animals in various behavioral paradigms. This allows for detailed analysis of locomotion, gait, social interactions, and other relevant behaviors. Since a major update in April 2022, DeepLabCut also supports tracking multiple animals in a single video recordingꜛ.
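As an example of downstream locomotion analysis, the sketch below computes frame-to-frame speed of one body part. DeepLabCut's output provides, per frame and body part, x and y coordinates plus a likelihood value; here we assume those values have already been loaded into a list of `(x, y, likelihood)` tuples (loading the output files is not shown). The function name, the likelihood threshold of 0.9, the pixel-to-cm scale, the frame rate, and the track itself are hypothetical.

```python
import math

def speeds(track, fps, min_likelihood=0.9, px_per_cm=1.0):
    """Frame-to-frame speed (cm/s) of one body part from (x, y, likelihood) rows.

    Steps where either frame's detection falls below `min_likelihood`
    are reported as None instead of a speed.
    """
    result = []
    for (x0, y0, p0), (x1, y1, p1) in zip(track, track[1:]):
        if p0 < min_likelihood or p1 < min_likelihood:
            result.append(None)  # unreliable detection: skip rather than guess
            continue
        dist_cm = math.hypot(x1 - x0, y1 - y0) / px_per_cm
        result.append(dist_cm * fps)
    return result

# Hypothetical snout track: pixel coordinates plus detection likelihood per frame.
snout = [(100.0, 50.0, 0.99), (103.0, 54.0, 0.98), (103.0, 54.0, 0.40), (109.0, 62.0, 0.97)]
print(speeds(snout, fps=30, px_per_cm=10.0))  # [15.0, None, None]
```

Marking low-confidence steps as missing, rather than silently interpolating, keeps tracking errors from showing up as spurious bursts of speed; whether and how to fill such gaps is an analysis decision.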

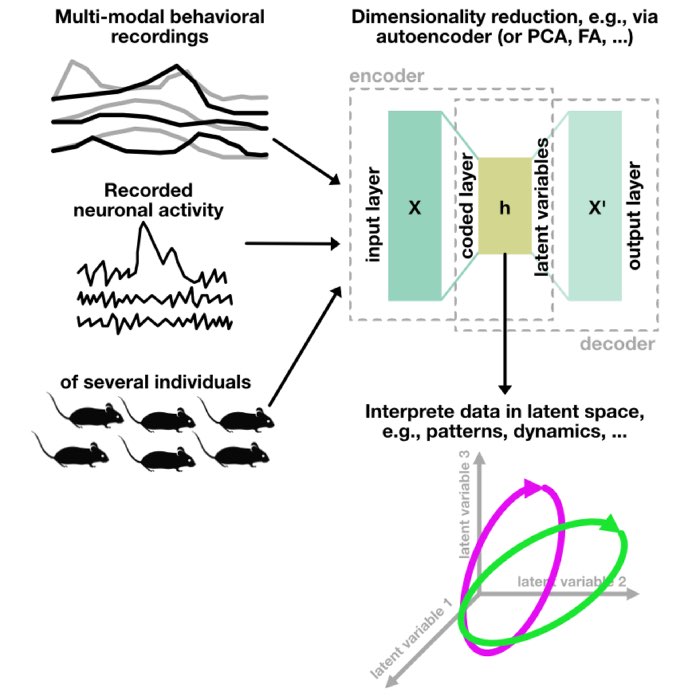

VAME

VAME, which stands for Variational Animal Motion Embedding, is a deep learning method specifically designed for unsupervised behavior segmentation and analysis (Luxem et al. (2022)ꜛ). Its purpose is to automatically discover and categorize spatiotemporal behavioral patterns from pose estimation signals obtained through video monitoring (check out this animation of VAME on GitHubꜛ and the pre-print version of the paperꜛ). VAME helps to uncover underlying behavioral structure and identify distinct motifs within the data. This methodology is particularly valuable for studying the dynamics of animal behavior in a wide range of experimental settings, facilitating investigations into the relationship between behavior and factors such as genetics, disease, and environmental conditions.
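Once a method like VAME has assigned each frame to a behavioral motif, the resulting label sequence is commonly summarized by how often each motif occurs and how the animal switches between motifs. The sketch below computes both from a hypothetical label sequence; it does not reproduce VAME's embedding or clustering, and ignoring self-transitions is a design choice of this sketch, not necessarily VAME's.

```python
from collections import Counter

def motif_usage(labels):
    """Fraction of frames assigned to each motif."""
    counts = Counter(labels)
    n = len(labels)
    return {m: counts[m] / n for m in sorted(counts)}

def transition_matrix(labels, n_motifs):
    """Row-normalized probabilities of switching from motif i to motif j.

    Consecutive frames in the same motif are ignored, so only actual switches count.
    """
    counts = [[0] * n_motifs for _ in range(n_motifs)]
    for a, b in zip(labels, labels[1:]):
        if a != b:
            counts[a][b] += 1
    matrix = []
    for row in counts:
        total = sum(row)
        matrix.append([c / total if total else 0.0 for c in row])
    return matrix

# Hypothetical per-frame motif labels, e.g. obtained by clustering a learned embedding.
labels = [0, 0, 0, 1, 1, 2, 2, 2, 0, 0, 1, 1]
print(motif_usage(labels))
print(transition_matrix(labels, n_motifs=3))  # [[0.0, 1.0, 0.0], [0.0, 0.0, 1.0], [1.0, 0.0, 0.0]]
```

Comparing such usage profiles and transition matrices between groups (for example, different genotypes or disease models) is one way to relate behavioral structure to experimental conditions.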

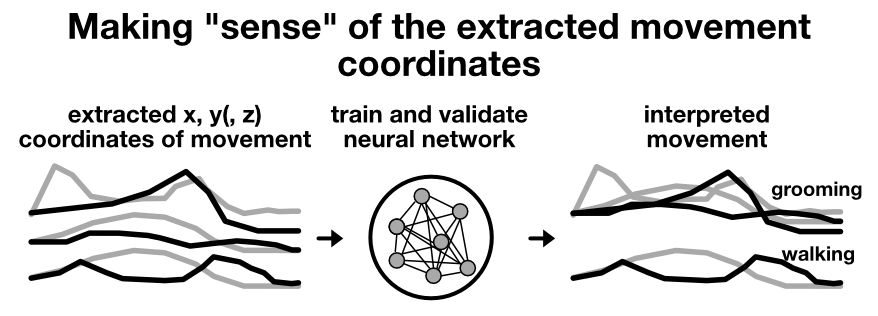

Sketch depicting the “sense-making” of behavioral movie recordings.


A comprehensive review of other open-source tools for behavioral video analysis can be found in Luxem et al. (2023)ꜛ.

Further readings

- Mathis, A., Mamidanna, P., Cury, K. M., Abe, T., Murthy, V. N., Mathis, M. W., & Bethge, M. (2018). DeepLabCut: markerless pose estimation of user-defined body parts with deep learning. Nature Neuroscience, 21(9), 1281-1289. doi:10.1038/s41593-018-0209-yꜛ

- DeepLabCut websiteꜛ

- Kabra, M., Robie, A. A., Rivera-Alba, M., Branson, S., & Branson, K. (2013). JAABA: interactive machine learning for automatic annotation of animal behavior. Nature Methods, 10(1), 64-67. doi:10.1038/nmeth.2281ꜛ

- Pereira, T. D., Aldarondo, D. E., Willmore, L., Kislin, M., Wang, S. S. H., Murthy, M., & Shaevitz, J. W. (2019). Fast animal pose estimation using deep neural networks. Nature Methods, 16(1), 117-125. doi:10.1038/s41592-018-0234-5ꜛ

- Syeda, A., Zhong, L., Tung, R., Long, W., Pachitariu, M., & Stringer, C. (2022). Facemap: a framework for modeling neural activity based on orofacial tracking. bioRxiv 2022.11.03.515121. doi:10.1101/2022.11.03.515121ꜛ

- Graving, J. M., Chae, D., Naik, H., Li, L., Koger, B., Costelloe, B. R., … & Couzin, I. D. (2019). DeepPoseKit, a software toolkit for fast and robust animal pose estimation using deep learning. eLife, 8, e47994. doi:10.7554/eLife.47994ꜛ

- Luxem, K., Sun, J. J., Bradley, S. P., Krishnan, K., Yttri, E., Zimmermann, J., Pereira, T. D., & Laubach, M. (2023). Open-source tools for behavioral video analysis: Setup, methods, and best practices. eLife, 12, e79305. doi:10.7554/eLife.79305ꜛ

- Luxem, K., Mocellin, P., Fuhrmann, F., et al. (2022). Identifying behavioral structure from deep variational embeddings of animal motion. Communications Biology, 5, 1267. doi:10.1038/s42003-022-04080-7ꜛ

- Luxem, K., Mocellin, P., Fuhrmann, F., Kürsch, J., Remy, S., & Bauer, P. (2022). Identifying behavioral structure from deep variational embeddings of animal motion. bioRxiv 2020.05.14.095430. doi:10.1101/2020.05.14.095430ꜛ

- Goodfellow, I., Bengio, Y., & Courville, A. (2016). Deep Learning. MIT Press. http://www.deeplearningbook.orgꜛ